UX Design Case Study: SAFEcar

Overview

I designed a car alarm and monitoring system with an accompanying app that enables people to remotely detect activity around their car and alert police if suspicious activity occurs.

Role

Sole UX Designer

Skills Used

Data Analysis

User Interviews

Creating empathy maps

Creating Personas

Mind Mapping

Affinity Diagramming

Wireframing in Adobe XD

Designing a mobile app

Designing a conversational UI

Writing Usability Test Script

Recruiting Users for Testing

Usability Testing

Analyzing Qualitative Data

Synthesizing Data into Actionable Findings

Presenting Recommendations

Proposed Design Process

Before starting this project, I outlined a design process to help keep it moving forward.

The Data Inspiring this Case Study

I started my research by looking at 2017 crimes in Austin by zip code. Then, I looked into what kinds of crimes were being committed. Theft and burglary of vehicle dominated the annual crime stats in 2017.

To investigate possible design problems surrounding theft and car burglaries I decided to interview 3 victims of vehicle theft.

Austin 2017 Annual Crime by Zip Code

Austin 2017 Annual Crime by Type of Crime

Source: data.austintx.gov

Conducting User Interviews

My objective for the interviews was to learn about people’s experiences as victims of auto theft: the process they went through to report the theft, how they tried to retrieve stolen items, and the preventative measures they took afterwards.

After conducting the interviews I analyzed interviewee responses and organized them into empathy maps for each person interviewed.

Empathy Mapping

The empathy maps helped show similarities between users and the frustrations they experienced in the aftermath of their car being broken into or stolen.

Two users expressed how police were pessimistic about finding their stolen items.

Also, I found it interesting how the people I interviewed felt it was partially their fault that their car was broken into.

For example, they felt keeping their spare key above their tire or leaving their belongings in the open led to burglars targeting their cars.

Persona Creation

From commonalities found in the empathy maps, I created Rick as a persona.

I outlined his characteristics, goals, and frustrations he experienced being a victim of car theft.

This persona helped clarify who I was designing for and the problems I would attempt to solve.

Defining the Problem

From the data provided by the City of Austin and my user interviews I narrowed in on a problem facing my user, Rick.

Problem:

People are fearful their car will be broken into and police won’t catch who did it.

Hypothesis:

If people have a way to monitor their car remotely and receive alerts, then they will become less fearful of break-ins and police will be more likely to catch burglars.

Value Proposition

Keeping my persona in mind I filled out a value proposition for a product that would help Rick monitor his car remotely and receive alerts for suspicious activity.

Filling out the value proposition allowed me to see the benefits of potential product features for Rick and how other potential users, such as the police, could benefit from it too.

Source: strategyzer.com

Outlining Project Themes

ADVISE

Have an alert system in place to warn the user when someone is approaching their vehicle. Provide instructions to the user if a break-in occurs.

REASSURE

Users are concerned with the security of their vehicles. They should have easy access to a live camera feed to check on their cars when feeling worried.

INTEGRATE

Victims of car theft need a way to send video evidence to police and easily fill out a police report. Ideally, the application would connect directly to police department systems.

Based on the proposed values, I outlined some project themes to further clarify my design goals. Outlining these project themes also gave me a chance to brainstorm potential features that could help solve pain points for Rick. As I progressed into designing a Conversational UI I found my project themes also served as a great reference guide for voice and tone.

Establishing the User Flow

Creating a user flow allowed me to map out the steps the user would take to accomplish a task.

Additionally, it revealed alternative flows and what features could be grouped together in the UI.

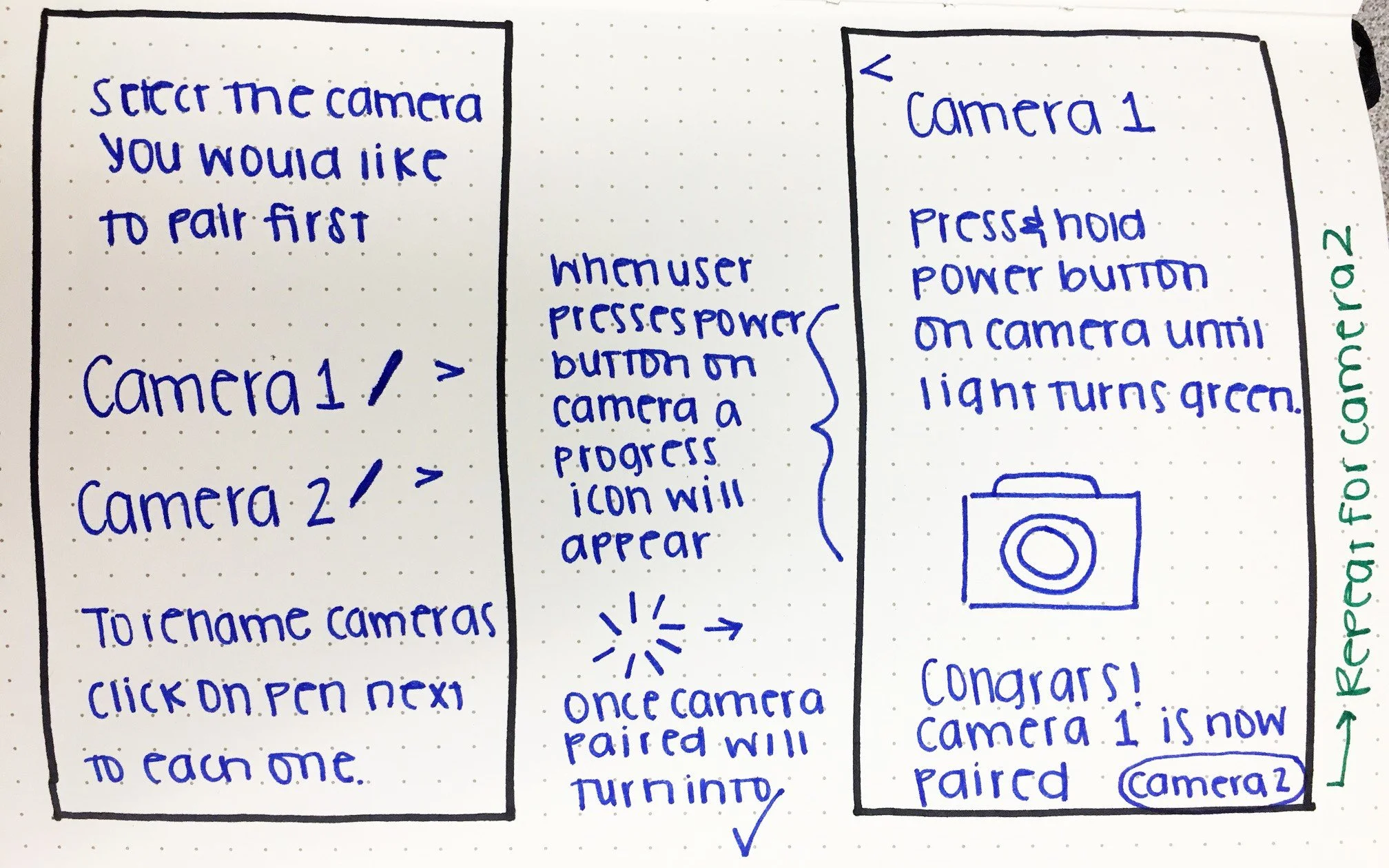

Ideation Sketching

Once I established a user flow, I began ideating in low fidelity so I could work out high level layout and features of the application quickly before moving into higher fidelities.

Based on interviews I knew my user would want to receive alerts and be able to monitor their car at all times so I chose to design a mobile app.

As I moved into high fidelity wireframing I reconsidered what information would be important to Rick when he first opened the app.

So, instead of having an overview of his car I decided to have the first thing he sees be the video feed.

After designing a graphical interface I began to explore if a conversational UI would better meet the user’s needs.

High Fidelity Wireframes

Designing a Conversational UI Solution

Affinity Diagramming

User Utterances

User Intents

Starting out, I wrote out possible utterances a user would say to the conversational UI.

Then, I grouped them by similar user intents or goals.

This illustrated how a conversational UI would be best utilized when the user wants to know what their alert settings are or change the settings.
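The grouping step described above can be sketched in code. This is only an illustration: the utterances and the intent labels (`check_alert_settings`, `change_alert_settings`, `view_video_feed`) are hypothetical examples written for this sketch, not the utterances from my affinity diagram, and the tagging itself is assumed to be done by hand.

```python
from collections import defaultdict

# Hypothetical utterances, each hand-tagged with an intent label.
# Neither the wording nor the labels come from the actual affinity diagram.
TAGGED_UTTERANCES = [
    ("What are my alert settings?", "check_alert_settings"),
    ("Are alerts turned on?", "check_alert_settings"),
    ("Alert me if someone is within ten feet", "change_alert_settings"),
    ("Only send alerts at night", "change_alert_settings"),
    ("Show me my car", "view_video_feed"),
]


def group_by_intent(tagged):
    """Return a dict mapping each intent to the utterances tagged with it."""
    groups = defaultdict(list)
    for utterance, intent in tagged:
        groups[intent].append(utterance)
    return dict(groups)


if __name__ == "__main__":
    groups = group_by_intent(TAGGED_UTTERANCES)
    # Largest groups first, like the biggest clusters on an affinity board.
    for intent, utterances in sorted(groups.items(), key=lambda kv: -len(kv[1])):
        print(f"{intent} ({len(utterances)})")
        for utterance in utterances:
            print(f"  - {utterance}")
```

Sorting the groups by size makes it easy to see which intents attract the most utterances, which is the kind of signal that pointed me toward the alert settings.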

Mind Mapping

Focusing on the alert settings, I created a mind map of entities the conversational UI would need to recognize and the relationships between them.

The mind map helped me see which entities are dependent on each other and instances where it’d be important to have the Voice UI ask questions to clarify the user’s intent.
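One way to picture the dependency idea is a small lookup that asks a follow-up question whenever a setting is missing something it depends on. This is a minimal sketch under stated assumptions: the entity names (`detection_distance`, `time_window`, `alert_method`), their dependencies, and the question wording are all hypothetical and are not taken from my mind map.

```python
# Hypothetical dependency model: each alert setting lists the entities
# the voice UI would need to hear before it could act on the request.
DEPENDENCIES = {
    "detection_distance": ["distance_value"],
    "time_window": ["start_time", "end_time"],
    "alert_method": ["channel"],
}

# Hypothetical clarifying questions, keyed by (setting, missing entity).
CLARIFYING_QUESTIONS = {
    ("detection_distance", "distance_value"): "How many feet from your car should trigger an alert?",
    ("time_window", "start_time"): "What time should alerts start?",
    ("time_window", "end_time"): "What time should alerts stop?",
    ("alert_method", "channel"): "How would you like to be alerted?",
}


def next_clarifying_question(setting, recognized):
    """Return a question for the first missing dependent entity, or None.

    `recognized` maps entity names to the values heard so far.
    """
    if setting not in DEPENDENCIES:
        raise ValueError(f"Unknown setting: {setting}")
    for entity in DEPENDENCIES[setting]:
        if entity not in recognized:
            return CLARIFYING_QUESTIONS[(setting, entity)]
    return None  # Everything needed was recognized; no question required.


if __name__ == "__main__":
    # The user gave a start time but no end time.
    print(next_clarifying_question("time_window", {"start_time": "10pm"}))
```

Returning `None` when nothing is missing keeps the conversation short for users who state their full request up front.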

Scenario

Rick is spending the night at a friend’s house and left his car at his apartment. His car has been broken into before and despite making sure it was locked he is still worried someone may try to burglarize or steal his car again. Rick needs a way to remotely monitor his car and receive alerts for suspicious activity around his car.

Conversational Flow Diagram

After exploring a screen and conversational UI, I feel the best way to move forward with this design would be a combination of both. I need to include a screen interface so users can see the video feed of their cars. However, integrating a voice UI could decrease the amount of time it takes the user to change their alert settings (I’d have to evaluate this during usability testing). Also, a voice UI reinforces the reassuring tone I want to convey to users.

Retrospective Following Generative Research

Based on the design process laid out initially, I felt I followed it up until the refine phase.

I did slightly deviate during the design phase because instead of choosing between two potential solutions I decided on using a combination of a screen and conversational UI to solve my user’s problem.

I feel the SAFEcar app and voice UI do help reduce Rick’s fear of future car break-ins by giving him a way to remotely monitor his car.

Next, I conducted usability testing to determine if any alert settings can be removed or changed and to gather more user utterances for the conversational UI.

Since I focused primarily on the alert settings feature of the app, I failed to address how video evidence could be integrated with police reporting systems to increase the likelihood of catching perpetrators and retrieving stolen items.

Next Steps

Push the screen UI into higher fidelities

Wireframe other features in the app

Explore other scenarios, considering threshold settings

Think about how the conversational UI would assist users in other use cases.

Possibly include a feature that would detect when the user is in a high crime area and automatically change alert settings accordingly.

Integrate the features with existing home security applications

Usability Testing

From my generative research, I created Rick as a persona. I decided to only test users who fit this persona so I chose to test the SAFEcar app with people who have had their car broken into and who were also first time users of the app.

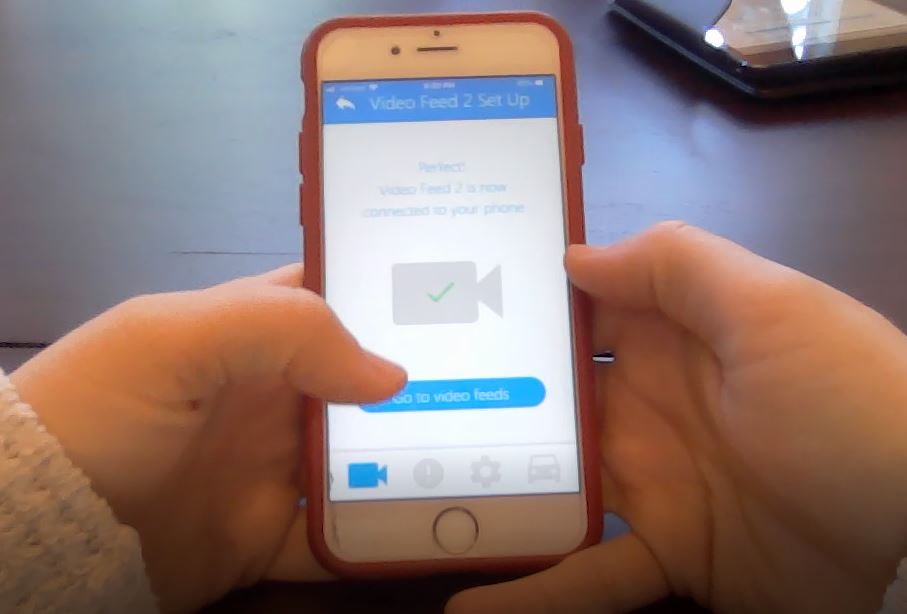

The mobile app is still in development so I decided to test what I had built out which includes initial app set up, the home screen, and the alert settings.

Test Objectives & Hypotheses

OBJECTIVES

Evaluate the navigation

Do users understand what the icons are in the fixed navigation menu?

Can they navigate to the alert settings to change them?

Learn if users can set up video feeds & change alert settings

Do they understand the video feed set up instructions? And can they successfully interact with the video cameras?

Do they understand what each setting changes?

Is it what they expect to see?

Assess user confidence

Does the user know when a change was made?

How confident are they that they correctly performed the task?

HYPOTHESES

Clear instructions will inform users how to set up the video feeds.

Multiple points of entry to the alert settings will better enable users to change alert settings

Clear feedback will increase confidence that alert settings have been changed or updated.

Approach & Methodology

With these three objectives in mind I combined task based evaluation and qualitative discovery into my usability test.

Five in-person usability tests and interviews were conducted over one week. The usability tests and follow up questions were video recorded as participants completed tasks with the SAFEcar prototype on an iPhone.

To measure the learnability of the video feed set up and of changing alert settings, I asked target users to complete three tasks:

The first task was to set up the video feeds in the app

Since the app is still in development, I did not have video cameras for users to interact with during the set up. To replicate the experience, I substituted a Bluetooth Bose® speaker for the video camera. The speaker makes a sound when the power button is pressed and when it connects to a device.

The second task was to enable alerts

And lastly, I asked users to change their alert settings.

Because this was the first time I’ve tested the SAFEcar app, I incorporated qualitative discovery to assess:

whether the design met users’ expectations

how confident users were in their actions

whether they understood the iconography in the app

Analysis

Quantitative Data

I used task success tracking with levels of completion to analyze the task based evaluation portion of the usability test.
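To illustrate how task success with levels of completion can be tallied, here is a minimal sketch. The three levels and their weights (success = 1, partial = 0.5, failure = 0) are an assumed scoring scheme rather than the exact one I used, and the outcomes in the example are placeholders, not the results of this study.

```python
from collections import Counter

# Assumed scoring scheme: full credit, half credit, no credit.
LEVEL_SCORES = {"success": 1.0, "partial": 0.5, "failure": 0.0}


def summarize_task(outcomes):
    """Summarize one task from a list of per-participant outcome levels."""
    if not outcomes:
        raise ValueError("At least one outcome is required")
    unknown = set(outcomes) - set(LEVEL_SCORES)
    if unknown:
        raise ValueError(f"Unknown outcome levels: {sorted(unknown)}")
    counts = Counter(outcomes)
    # Weighted success rate: average score across all participants.
    rate = sum(LEVEL_SCORES[o] for o in outcomes) / len(outcomes)
    return {"counts": dict(counts), "success_rate": rate}


if __name__ == "__main__":
    # Placeholder outcomes for five participants (not real study data).
    placeholder_outcomes = ["success", "success", "partial", "failure", "success"]
    print(summarize_task(placeholder_outcomes))
```

Giving partial credit separates users who finished with assistance from users who could not finish at all, which a simple pass/fail count would hide.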

Qualitative Data

To analyze the qualitative data I collected, I watched the videos of the usability tests and then entered observations into the rainbow spreadsheet. This allowed me to visualize observations between users and see commonalities and outliers.
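The core of a rainbow spreadsheet is a grid of observations against participants. The sketch below shows that structure in code; the participant IDs (`P1` to `P5`) and the observations are placeholders invented for illustration and do not reproduce my spreadsheet.

```python
PARTICIPANTS = ["P1", "P2", "P3", "P4", "P5"]

# Placeholder observations mapped to the participants who showed them.
OBSERVATIONS = {
    "Hesitated before choosing an icon": {"P2", "P5"},
    "Read the instructions aloud": {"P1", "P2", "P3", "P4"},
    "Asked what a label meant": {"P3"},
}


def build_rows(observations, participants):
    """Return (observation, marks, count) rows, most common first."""
    rows = []
    for observation, seen_in in observations.items():
        # One mark per participant column: "x" if observed, "." if not.
        marks = "".join("x" if p in seen_in else "." for p in participants)
        rows.append((observation, marks, len(seen_in)))
    rows.sort(key=lambda row: -row[2])
    return rows


if __name__ == "__main__":
    for observation, marks, count in build_rows(OBSERVATIONS, PARTICIPANTS):
        print(f"{marks}  {count}/{len(PARTICIPANTS)}  {observation}")
```

Rows with many marks point to commonalities, while rows with a single mark are the outliers worth a second look.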

Findings

After analyzing the data and reviewing my usability test goals, I discovered that users easily learned how to set up video feeds and change alert settings but struggled to navigate to the settings.

Additionally, users’ confidence in their interactions with the app was high, but users also expressed that they would need to interact with the app more to gain confidence in the app’s ability to alert them.

Task 1: Video Feed Set Up

Every user started setting up the Video Feed correctly.

Unfortunately, only 3 out of 5 users completed the task successfully without any assistance because of prototype timing errors.

Despite prototype malfunctions, 4 out of 5 users were confident that the video feeds were set up at the end of the task.

Task 2: Understanding of Navigation

Users’ understanding of the navigation was hit or miss.

All users correctly identified the settings icon.

However, only 3 out of 5 users knew what the notification icon was, and all the users expressed uncertainty about what the car icon would navigate to.

I believe evaluating these features in future usability tests would shed more insights on users’ understanding of the app’s features and the navigation’s iconography.

Task 3: Enable Alerts

3 out of 5 users correctly enabled alerts.

The users who didn’t correctly enable alerts tapped on the alert settings icon.

The users who correctly enabled alerts stated they did so because it seemed like it was the easiest thing or because the toggle button was so apparent on the home screen.

4 out of 5 users did not expect to see “Activity within 6 feet” to pop up when alerts were turned on.

Task 4: Change Alert Settings

Only one user had complete success changing alert settings without assistance.

I expected users would tap on the settings icon to increase the alert detection area, but 2 users wanted to tap on “Activity within 6 feet” first.

4 out of 5 users correctly used the slider bar to increase the alert detection area as I expected. But 3 out of 5 users stated the slider bar did not react the way they expected it would.

On a positive note, all users understood the functionality and found value in being able to set an alert time window and change the way they receive alerts.

Recommendations

Tier 1:

Increase prototype timing during video feed set up

Despite it being a prototype malfunction, some users were unable to read the instructions in the amount of time provided during the video feed set up. I listed this as a tier one recommendation because if users cannot set up the video feeds they cannot use the SAFEcar app.

Tier 2:

Make “Activity within 6 feet” clickable and another access point to alert settings

Users were split in how they navigated to the alert settings. True to my hypothesis, giving multiple points of entry or different ways to change alert settings will decrease the number of attempts to get to the settings.

Add labels to navigation icons

Since there was so much confusion surrounding the icons in the navigation menu, I think adding labels will help clarify where each icon leads.

Tier 3:

Make the “activity within” slider bar change incrementally

This would align the slider’s behavior with users’ expectations.

Add a “save” button in settings

This lets users know they are committing to the setting changes they’ve made.